Advanced LSF resource connector configuration on IBM Cloud - part III

Overview

This is the third instalment in a series of blogs covering advanced configuration topics for LSF resource connector. The earlier parts in the series can be found here: part I, part II.

As hinted at the close of part II, this instalment covers running Docker workloads on cloud instances that are dynamically managed by the LSF resource connector. The cloud environment in this example is IBM Cloud. To understand more about LSF resource connector, please read the earlier parts in the blog series.

LSF provides a framework for the management and execution of containerized workloads. It supports the following container runtimes: Docker, NVIDIA Docker, Shifter, Singularity, Podman and Enroot. The LSF documentation provides configuration steps for the supported container runtimes. Once configured, this capability is effectively transparent from the end-user perspective.

Enable Docker support

First, we need to enable support in LSF for running Docker containers. This is covered in detail in the LSF documentation, and I also wrote about it previously in the blog post Jack of all containers. The following steps assume that those configuration steps have been completed.

LSF uses a Boolean resource named docker to identify hosts where the Docker runtime is available. This Boolean resource needs to be set on the compute nodes which are dynamically started by LSF resource connector.
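On dynamically started hosts, one way to set this resource at boot is to append [resource docker] to LSF_LOCAL_RESOURCES in lsf.conf, which is what the user_data.sh changes later in this post do with a single sed command. The following is a minimal sketch of the same idea wrapped in an idempotent shell function. The function name add_docker_resource is hypothetical, and it assumes an LSF_LOCAL_RESOURCES line already exists with a double-quoted value.

```shell
#!/bin/bash
# Sketch: tag a host with the LSF Boolean resource "docker" by appending
# "[resource docker]" to LSF_LOCAL_RESOURCES in the given lsf.conf file.
# Assumption: an LSF_LOCAL_RESOURCES line already exists and its value is
# enclosed in double quotes. add_docker_resource is a hypothetical name.
add_docker_resource() {
  local conf="$1"
  # Do nothing if the resource is already listed, so re-running is harmless.
  if grep '^LSF_LOCAL_RESOURCES=' "$conf" | grep -qF '[resource docker]'; then
    return 0
  fi
  # Insert the resource just before the last double quote on the line.
  sed -i 's/^\(LSF_LOCAL_RESOURCES=.*\)"/\1 [resource docker]"/' "$conf"
}
```

Inside user_data.sh this would be called as add_docker_resource "$LSF_CONF_FILE". The duplicate check matters only if the script can run more than once on the same host; for a freshly provisioned instance the plain sed is equivalent.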

In our example, an insecure Docker registry (using HTTP) has been set up on the LSF management host in the cluster, with hostname lsf-mgmt-host. It serves as the registry for an OpenFOAM Docker image which has been prepared according to the procedures documented here. This blog will not go into detail on the creation of the insecure Docker registry. On the LSF management node, the output below shows the available images. We see that the OpenFOAM image is available both locally and, via the localhost:5000 tag, in the HTTP registry on port 5000.

# docker image ls

REPOSITORY                    TAG            IMAGE ID       CREATED         SIZE
localhost/openfoam/openfoam   v1912_update   bce4eb059f36   11 days ago     6.71 GB
localhost:5000/openfoam       v1912_update   bce4eb059f36   11 days ago     6.71 GB
docker.io/library/registry    2              6a3edb1d5eb6   10 months ago   26 MB

Note: An insecure Docker registry was used in this example for simplicity and is not recommended in production.
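To confirm from a compute node that the image is actually served by the registry, you can query the Docker Registry HTTP API V2, which lists the tags of a repository at /v2/<repository>/tags/list. The sketch below assumes the host, port, repository and tag used in this example, and that the image was pushed to the registry (for example with docker push localhost:5000/openfoam:v1912_update). The function names tags_url and has_tag are hypothetical.

```shell
#!/bin/bash
# Sketch: check that an image tag is served by the plain-HTTP registry.
# Assumption: registry on lsf-mgmt-host:5000, as in this example; override
# REGISTRY_HOST / REGISTRY_PORT in the environment for other setups.
REGISTRY_HOST="${REGISTRY_HOST:-lsf-mgmt-host}"
REGISTRY_PORT="${REGISTRY_PORT:-5000}"

# Build the Registry HTTP API V2 URL that lists the tags of a repository.
tags_url() {
  printf 'http://%s:%s/v2/%s/tags/list\n' "$REGISTRY_HOST" "$REGISTRY_PORT" "$1"
}

# Succeed if the tag appears in the registry's JSON tag list.
# curl -f makes HTTP errors (e.g. 404 for an unknown repository) fail quietly.
has_tag() {
  local repo="$1" tag="$2"
  curl -fsS "$(tags_url "$repo")" | grep -qF "\"$tag\""
}

# Example: has_tag openfoam v1912_update && echo "image available"
```

Plain HTTP is used here only because the registry in this example is insecure; a TLS-enabled registry would use an https URL.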

As was the case in part II of the blog series, the user_data.sh script will be used for multiple purposes here:

- Set the docker Boolean resource on dynamically started compute nodes

- Install the Docker CE runtime and relevant support packages

- Add user(s) to the docker group (/etc/group)

- Configure Docker to use the insecure Docker registry on the LSF management host lsf-mgmt-host
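The registry configuration step amounts to writing /etc/docker/daemon.json with an insecure-registries entry before Docker starts. Building JSON with echo -e requires escaping every double quote, which is easy to get wrong; a heredoc avoids that. The following is a minimal sketch under that assumption, with a hypothetical function name write_daemon_json and the registry address passed in as an argument.

```shell
#!/bin/bash
# Sketch: write the Docker daemon configuration that allows pulling from
# the plain-HTTP registry on the LSF management host.
# write_daemon_json is a hypothetical helper, not part of the LSF template.
write_daemon_json() {
  local registry="$1"                           # e.g. lsf-mgmt-host:5000
  local target="${2:-/etc/docker/daemon.json}"  # Docker's default location
  mkdir -p "$(dirname "$target")"
  # ">" overwrites the file, so re-running never yields duplicated JSON.
  # Note: this also discards any other settings already in the file.
  cat > "$target" <<EOF
{
  "insecure-registries": [ "$registry" ]
}
EOF
}
```

In user_data.sh this would run before systemctl start docker, for example write_daemon_json lsf-mgmt-host:5000. If Docker is already running, it has to be restarted to pick up the change.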

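The following updates were made to the user_data.sh script; see the inline comments for details. Note that the modified script is passed to diff as the first file, so the added lines are prefixed with - rather than +.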

$ diff -u4 ./user_data.sh ./user_data_sh.org

--- ./user_data.sh 2024-07-29 18:44:24.483146000 +0000

+++ ./user_data_sh.org 2024-07-11 14:34:47.688341000 +0000

@@ -29,25 +29,8 @@

#!/bin/bash

# shellcheck disable=all

-#

-# The following steps will add the Docker CE repo, install the latest Docker CE

-# version along with supporting packages. It will create a Docker Linux group

-# and add the lsfadmin user to that group. Furthermore, it will create

-# the /etc/docker/daemon.json file pointing to the insecure Docker registry

-# which has been configured on the LSF management host. Finally it will

-# start Docker. Note that the hostname lsf-mgmt-host for the insecure-registries

-# configuration of Docker needs to be updated accordingly.

-#

-yum-config-manager --add-repo https://download.docker.com/linux/rhel/docker-ce.repo -y

-dnf install htop hwloc hwloc-libs libevent stress stress-ng python36 docker-ce docker-ce-cli containerd.io docker-buildx-plugin docker-compose-plugin -y >> $logfile 2>&1

-ln -s /usr/bin/python3 /usr/bin/python

-groupadd docker >> $logfile 2>&1

-usermod -aG docker lsfadmin >> $logfile 2>&1

-echo -e "{\n \"insecure-registries\" : [ \"lsf-mgmt-host:5000\" ]\n }" >> /etc/docker/daemon.json

-systemctl start docker >> $logfile 2>&1

-

if [ "$compute_user_data_vars_ok" != "1" ]; then

echo 2>&1 "fatal: vars block is missing"

exit 1

fi

@@ -225,15 +208,8 @@

else

echo "Can not get instance ID" >> $logfile

fi

-#

-# Add the docker Boolean variable to the LSF_LOCAL_RESOURCES variable in

-# the lsf.conf file on the compute hosts. This will ensure that the host

-# is tagged with the docker variable.

-#

-sed -i "s/\(LSF_LOCAL_RESOURCES=.*\)\"/\1 [resource docker]\"/" $LSF_CONF_FILE >> $logfile 2>&1

-

#Update LSF Tuning on dynamic hosts

LSF_TUNABLES="etc/sysctl.conf"

echo 'vm.overcommit_memory=1' >> $LSF_TUNABLES

echo 'net.core.rmem_max=26214400' >> $LSF_TUNABLES

Application profile configuration

Next, we configure the LSF application profile for the OpenFOAM Docker image which has been pushed to the insecure Docker registry on the LSF management host. LSF application profiles can be used to define common job parameters for the same job type, including the container and container runtime definition. Learn more about LSF application profiles here.

On the LSF management node, the following application profile is defined in $LSF_ENVDIR/lsbatch/<clustername>/configdir/lsb.applications. Note that the hostname lsf-mgmt-host must be replaced with the hostname of the host where the insecure Docker registry has been set up in your environment. Additionally, the volume specification -v /mnt/vpcstorage/data is specific to this environment and can be adjusted or removed as needed.

….

….

Begin Application

NAME = openfoam

DESCRIPTION = Example OpenFOAM application

CONTAINER = docker[image(lsf-mgmt-host:5000/openfoam:v1912_update) \

options(--rm --net=host --ipc=host \

--cap-add=SYS_PTRACE \

-v /etc/passwd:/etc/passwd \

-v /etc/group:/etc/group \

-v /mnt/vpcstorage/data:/mnt/vpcstorage/data \

) starter(root)]

EXEC_DRIVER = context[user(lsfadmin)] \

starter[/opt/ibm/lsf_worker/10.1/linux3.10-glibc2.17-x86_64/etc/docker-starter.py] \

controller[/opt/ibm/lsf_worker/10.1/linux3.10-glibc2.17-x86_64/etc/docker-control.py] \

monitor[/opt/ibm/lsf_worker/10.1/linux3.10-glibc2.17-x86_64/etc/docker-monitor.py]

End Application

….

….

To make the above change take effect, run the badmin reconfig command as the LSF administrator. The LSF bapp command can then be used to check the new configuration of the LSF application profile openfoam.

$ badmin reconfig

Checking configuration files ...

No errors found.

Reconfiguration initiated

$ bapp -l openfoam

APPLICATION NAME: openfoam

-- Example OpenFOAM application

STATISTICS:

NJOBS  PEND  RUN  SSUSP  USUSP  RSV
0      0     0    0      0      0

PARAMETERS:

CONTAINER: docker[image(lsf-mgmt-host:5000/openfoam:v1912_update) options(--rm --net=host --ipc=host --cap-add=SYS_PTRACE -v /etc/passwd:/etc/passwd -v /etc/group:/etc/group -v /mnt/vpcstorage/data:/mnt/vpcstorage/data ) starter(root)]

EXEC_DRIVER:

context[user(lsfadmin)]

starter[/opt/ibm/lsf_worker/10.1/linux3.10-glibc2.17-x86_64/etc/docker-starter.py]

controller[/opt/ibm/lsf_worker/10.1/linux3.10-glibc2.17-x86_64/etc/docker-control.py]

monitor[/opt/ibm/lsf_worker/10.1/linux3.10-glibc2.17-x86_64/etc/docker-monitor.py]

Submitting workload

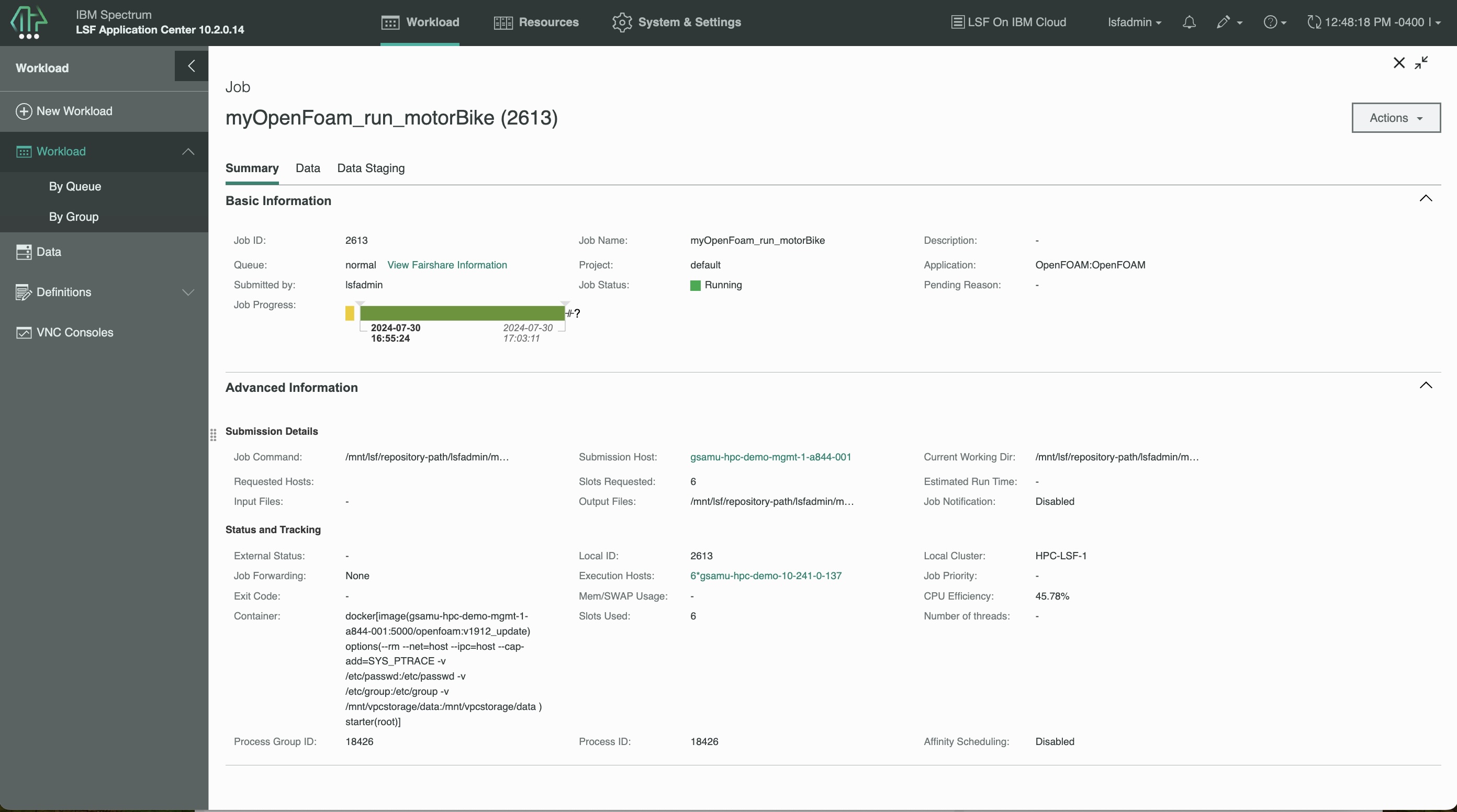

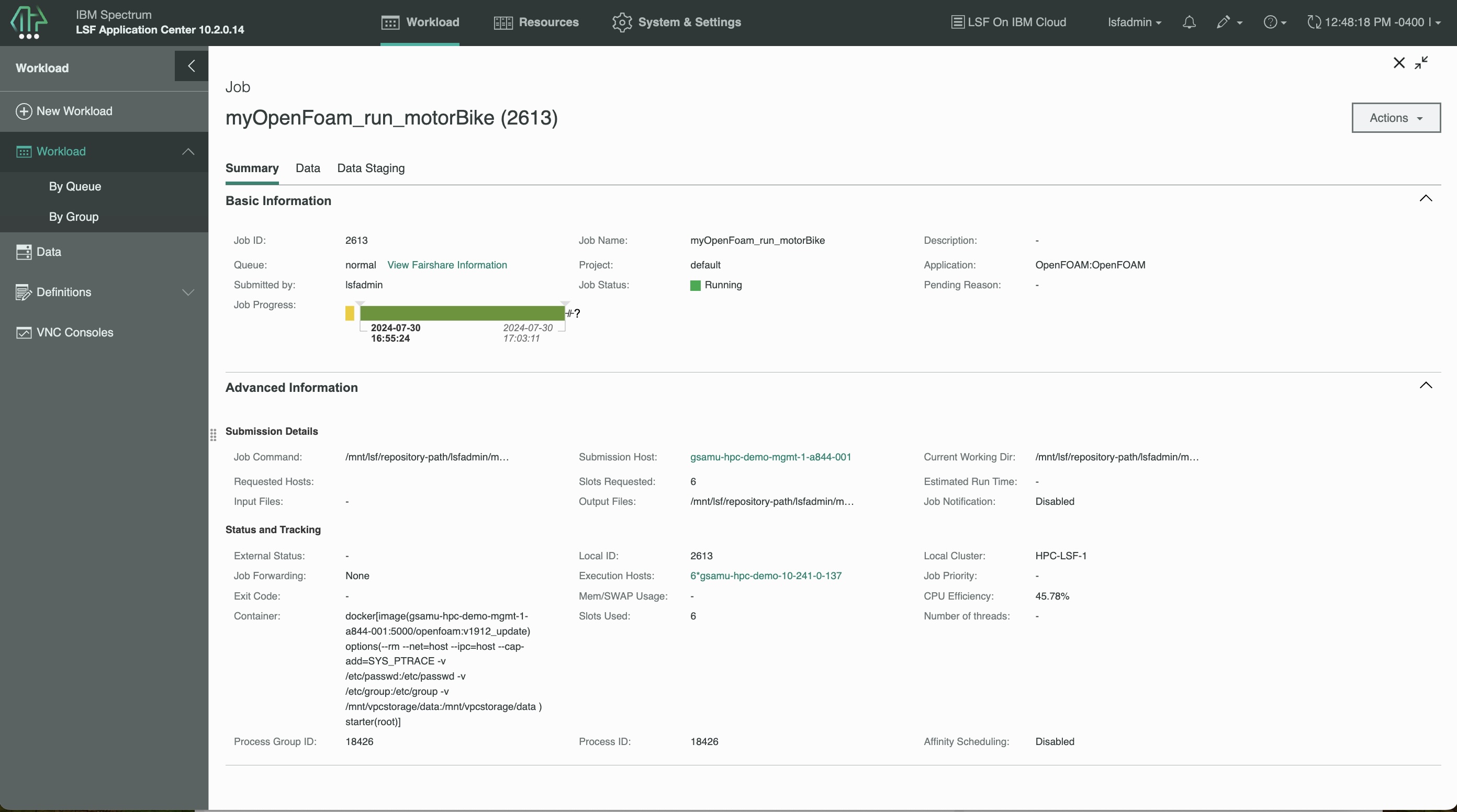

With all of the configuration in place, it’s now time to submit an OpenFOAM workload. For this, LSF Application Center is used. The OpenFOAM application template is available on the Spectrum Computing github here. The OpenFOAM application template is configured to use the openfoam application profile. An example job is submitted and it runs to completion successfully. In the screenshot below, we see that the openfoam Docker container is executed.
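The same job can also be submitted from the command line with bsub -app. The sketch below mirrors the example job (6 tasks, span[hosts=1], application profile openfoam). The case script ./Allrun, the output file names and the function name submit_openfoam are hypothetical placeholders, and the function only prints the command unless DRY_RUN=0 is set.

```shell
#!/bin/bash
# Sketch: command-line equivalent of the Application Center submission.
# Assumptions: 6 tasks on one host mirror the example job; ./Allrun and the
# output/error file names are hypothetical placeholders.
APP_PROFILE="openfoam"
NTASKS=6
CASE_SCRIPT="./Allrun"   # hypothetical OpenFOAM case script

submit_openfoam() {
  local args=(-app "$APP_PROFILE" -n "$NTASKS" -R "span[hosts=1]"
              -o "output.%J.txt" -e "error.%J.txt" "$CASE_SCRIPT")
  if [ "${DRY_RUN:-1}" = "1" ]; then
    # Dry run (the default): print the command instead of submitting it.
    echo bsub "${args[@]}"
  else
    bsub "${args[@]}"
  fi
}
```

The -R string only asks for span[hosts=1]. As the job output that follows shows, the requested resources are just span[hosts=1], while the effective resource requirement also contains select[(docker)], apparently added by LSF for the Docker application profile, so the docker resource does not need to be requested explicitly.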

The LSF bjobs and bhist output from the job follows below:

$ bjobs -l 2613

Job <2613>, Job Name <myOpenFoam_run_motorBike>, User <lsfadmin>, Project <defa

ult>, Application <openfoam>, Status <RUN>, Queue <normal>

, Command </mnt/lsf/repository-path/lsfadmin/myOpenFoam_ru

n_1722358195200AuWDY/motorBike/bsub.myOpenFoam_run>, Share

group charged </lsfadmin>

Tue Jul 30 16:49:55: Submitted from host <gsamu-hpc-demo-mgmt-1-a844-001>, CWD

</mnt/lsf/repository-path/lsfadmin/myOpenFoam_run_17223581

95200AuWDY/motorBike>, Specified CWD </mnt/lsf/repository-

path/lsfadmin/myOpenFoam_run_1722358195200AuWDY/motorBike>

, Output File </mnt/lsf/repository-path/lsfadmin/myOpenFoa

m_run_1722358195200AuWDY/motorBike/output.lsfadmin.txt>, E

rror File </mnt/lsf/repository-path/lsfadmin/myOpenFoam_ru

n_1722358195200AuWDY/motorBike/error.lsfadmin.txt>, Notify

when job begins/ends, 6 Task(s), Requested Resources <spa

n[hosts=1]>;

Tue Jul 30 16:55:23: Started 6 Task(s) on Host(s) <gsamu-hpc-demo-10-241-0-137>

<gsamu-hpc-demo-10-241-0-137> <gsamu-hpc-demo-10-241-0-137

> <gsamu-hpc-demo-10-241-0-137> <gsamu-hpc-demo-10-241-0-1

37> <gsamu-hpc-demo-10-241-0-137>, Allocated 6 Slot(s) on

Host(s) <gsamu-hpc-demo-10-241-0-137> <gsamu-hpc-demo-10-2

41-0-137> <gsamu-hpc-demo-10-241-0-137> <gsamu-hpc-demo-10

-241-0-137> <gsamu-hpc-demo-10-241-0-137> <gsamu-hpc-demo-

10-241-0-137>, Execution Home </home/lsfadmin>, Execution

CWD </mnt/lsf/repository-path/lsfadmin/myOpenFoam_run_1722

358195200AuWDY/motorBike>;

Tue Jul 30 17:03:33: Resource usage collected.

The CPU time used is 1411 seconds.

MEM: 928 Mbytes; SWAP: 0 Mbytes; NTHREAD: 41

PGID: 18426; PIDs: 18426 18427 18428 20088

PGID: 20374; PIDs: 20374 20388 20800 21385

PGID: 21389; PIDs: 21389

PGID: 21390; PIDs: 21390

PGID: 21391; PIDs: 21391

PGID: 21392; PIDs: 21392

PGID: 21393; PIDs: 21393

PGID: 21394; PIDs: 21394

MEMORY USAGE:

MAX MEM: 982 Mbytes; AVG MEM: 422 Mbytes; MEM Efficiency: 0.00%

CPU USAGE:

CPU PEAK: 5.89 ; CPU PEAK DURATION: 63 second(s)

CPU AVERAGE EFFICIENCY: 42.81% ; CPU PEAK EFFICIENCY: 98.15%

SCHEDULING PARAMETERS:

           r15s  r1m  r15m  ut  pg  io  ls  it  tmp  swp  mem
loadSched     -    -     -   -   -   -   -   -    -    -    -
loadStop      -    -     -   -   -   -   -   -    -    -    -

RESOURCE REQUIREMENT DETAILS:

Combined: select[(docker) && (type == any)] order[r15s:pg] span[hosts=1]

Effective: select[(docker) && (type == any)] order[r15s:pg] span[hosts=1]

$ bhist -l 2613

Job <2613>, Job Name <myOpenFoam_run_motorBike>, User <lsfadmin>, Project <defa

ult>, Application <openfoam>, Command </mnt/lsf/repository

-path/lsfadmin/myOpenFoam_run_1722358195200AuWDY/motorBike

/bsub.myOpenFoam_run>

Tue Jul 30 16:49:55: Submitted from host <gsamu-hpc-demo-mgmt-1-a844-001>, to Q

ueue <normal>, CWD </mnt/lsf/repository-path/lsfadmin/myOp

enFoam_run_1722358195200AuWDY/motorBike>, Specified CWD </

mnt/lsf/repository-path/lsfadmin/myOpenFoam_run_1722358195

200AuWDY/motorBike>, Output File </mnt/lsf/repository-path

/lsfadmin/myOpenFoam_run_1722358195200AuWDY/motorBike/outp

ut.lsfadmin.txt>, Error File </mnt/lsf/repository-path/lsf

admin/myOpenFoam_run_1722358195200AuWDY/motorBike/error.ls

fadmin.txt>, Notify when job begins/ends, 6 Task(s), Reque

sted Resources <span[hosts=1]>;

Tue Jul 30 16:55:23: Dispatched 6 Task(s) on Host(s) <gsamu-hpc-demo-10-241-0-1

37> <gsamu-hpc-demo-10-241-0-137> <gsamu-hpc-demo-10-241-0

-137> <gsamu-hpc-demo-10-241-0-137> <gsamu-hpc-demo-10-241

-0-137> <gsamu-hpc-demo-10-241-0-137>, Allocated 6 Slot(s)

on Host(s) <gsamu-hpc-demo-10-241-0-137> <gsamu-hpc-demo-

10-241-0-137> <gsamu-hpc-demo-10-241-0-137> <gsamu-hpc-dem

o-10-241-0-137> <gsamu-hpc-demo-10-241-0-137> <gsamu-hpc-d

emo-10-241-0-137>, Effective RES_REQ <select[(docker) && (

type == any)] order[r15s:pg] span[hosts=1] >;

Tue Jul 30 16:55:23: Starting (Pid 18426);

Tue Jul 30 16:55:24: Running with execution home </home/lsfadmin>, Execution CW

D </mnt/lsf/repository-path/lsfadmin/myOpenFoam_run_172235

8195200AuWDY/motorBike>, Execution Pid <18426>;

Tue Jul 30 17:04:01: Done successfully. The CPU time used is 1535.1 seconds;

Tue Jul 30 17:04:02: Post job process done successfully;

MEMORY USAGE:

MAX MEM: 982 Mbytes; AVG MEM: 431 Mbytes; MEM Efficiency: 0.00%

CPU USAGE:

CPU PEAK: 5.92 ; CPU PEAK DURATION: 63 second(s)

CPU AVERAGE EFFICIENCY: 50.67% ; CPU PEAK EFFICIENCY: 98.68%

Summary of time in seconds spent in various states by Tue Jul 30 17:04:02

PEND  PSUSP  RUN  USUSP  SSUSP  UNKWN  TOTAL
328   0      518  0      0      0      846

Conclusion

The user_data.sh script of LSF resource connector allows a high degree of customization for cloud compute resources that dynamically join the LSF cluster. We’ve demonstrated how it can be used to tag cloud compute resources with a specific LSF Boolean resource, install additional packages, and customize configuration. This is a simplified example, but it illustrates the point.